Mozilla DeepSpeech Engine in Flutter Using Dart FFI

Breaking language boundaries by directly integrating C/C++ code in Flutter apps.

In this article, we will create a Flutter plugin that calls a native C/C++ library using Dart FFI. We will use Mozilla's DeepSpeech C library as an example to show the implementation. First, let's have a very brief overview of FFI and DeepSpeech.

Foreign Function Interfaces (FFI)

A foreign function interface (FFI) is a mechanism by which a function written in one language can be called from code written in another language.

Dart 2.5, released in September 2019, added beta support for calling C code directly from Dart, and the feature was marked stable with the Dart 2.12 release.

Mozilla DeepSpeech

Mozilla's Project DeepSpeech is an open-source speech-to-text engine that uses a model trained with machine learning techniques based on Baidu's Deep Speech research paper. It is one of the best open-source alternatives to Google's speech recognition APIs, and it runs completely offline on anything from low-power devices up to high-end systems.

Prerequisite

This article assumes you have some hands-on experience writing basic Flutter apps and are familiar with C code.

To compile C code for different platforms, the following tools are required: CMake, Ninja, the Android NDK and Xcode. Make sure the ANDROID_NDK environment variable points to your Android NDK installation, since the Android build commands use it.

Let's get started

This article is a step-by-step guide to integrating the DeepSpeech C library in Flutter. The same steps can help you integrate other C/C++ libraries as well.

The following is a high-level overview of the steps needed for the complete implementation; we'll discuss each step in detail. Here is the example repo to follow along.

- Get DeepSpeech 0.9.3 binaries and models.

- Create our own C library wrapper that adds additional logic.

- Compile our C library for Android and iOS (this generates .so files for Android and a static library for iOS).

- Write a Flutter plugin that uses our C library.

1. Getting DeepSpeech 0.9.3 binaries and models.

Fortunately, Mozilla already provides pre-built binaries for Android. You can directly download these from their official repository. Here is the link for armeabi-v7a and arm64-v8a shared libraries.

For iOS, head over to this link for information on how to generate the iOS framework. For convenience, I have already gathered the required files in the libdeepspeech_0.9.3 folder in the repo.

NOTE: If you are integrating some other library that does not provide pre-built files, you can check the third step for building shared libraries from source code.

You can place your files according to the directory structure below.

libdeepspeech_0.9.3
├── android
│   ├── arm64-v8a
│   │   └── libdeepspeech.so
│   └── armeabi-v7a
│       └── libdeepspeech.so
├── deepspeech.h
└── deepspeech_ios.framework
    └── deepspeech_ios

Download the English pre-trained model here; we'll use this model to convert speech to text.

2. Creating our C library

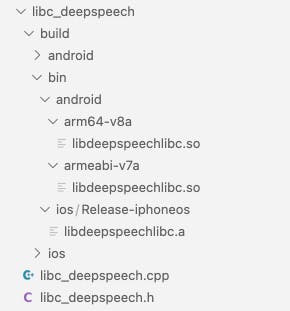

A C library typically consists of a header file (function declarations) and a source file (function definitions). Let's start by creating a folder libc_deepspeech, which contains a libc_deepspeech.h header file and a libc_deepspeech.cpp source file. Also create a build folder with android and ios subfolders, which will hold the CMake build scripts (more on that later).

Now, your directory structure should look like this.

libc_deepspeech
├── build
│   ├── android
│   │   └── CMakeLists.txt
│   └── ios
│       └── CMakeLists.txt
├── libc_deepspeech.cpp
└── libc_deepspeech.h

There are a few things we need to set up first in libc_deepspeech.h. Paste the code below:

#pragma once

#include <stdint.h> // uint64_t

#define EXPORTED __attribute__((visibility("default"))) __attribute__((used))

#ifdef __cplusplus
extern "C" {
#endif

// Your function declarations go here...

#ifdef __cplusplus
}
#endif

- #pragma once is an include guard: it prevents the header from being included more than once in the same translation unit. You can read more here about include guards and why they are needed.
- #define EXPORTED __attribute__((visibility("default"))) __attribute__((used)) is very important here. visibility("default") keeps the function in the library's exported symbols (even if the build hides symbols by default), so Dart can look it up by name, and used tells the compiler to retain the function in the object file even if nothing references it.
- Lastly, all declarations are wrapped in extern "C" {} to disable C++ name mangling, so that our Dart code can find each function by its original name.
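To see the EXPORTED macro and the extern "C" block working together outside DeepSpeech, here is a minimal single-file sketch. The count_words function is hypothetical and only serves as an illustration: its body uses C++ (std::istringstream, std::string), while its signature uses only C types, which is what Dart FFI can describe. Because the declaration sits inside extern "C", the later definition keeps C linkage and therefore an unmangled symbol name.

```cpp
#include <cstdint>
#include <sstream>
#include <string>

// Same macro as in libc_deepspeech.h: export the symbol and keep it even if unused.
#define EXPORTED __attribute__((visibility("default"))) __attribute__((used))

#ifdef __cplusplus
extern "C" {
#endif

// Hypothetical example function; only C types appear in the signature.
EXPORTED uint64_t count_words(const char *text);

#ifdef __cplusplus
}
#endif

// The definition may use C++ freely; it inherits the C linkage declared above.
uint64_t count_words(const char *text)
{
    if (text == nullptr) {
        return 0;
    }
    std::istringstream stream(text);
    std::string word;
    uint64_t count = 0;
    // operator>> splits on whitespace, so each extraction is one word
    while (stream >> word) {
        ++count;
    }
    return count;
}
```

The DeepSpeech wrapper follows the same shape: C++ types such as std::string stay inside the function bodies, and only pointers and fixed-width integers cross the boundary.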

Let's add these functions to our library.

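// Returns the DeepSpeech version string.
EXPORTED char *deepspeech_verison(void);
// Loads the model file and returns an opaque pointer to DeepSpeech's ModelState.
EXPORTED void *create_model(char *model_path);
// Returns the sample rate (in Hz) expected by the model.
EXPORTED uint64_t model_sample_rate(void *model_state);
// Transcribes 16-bit PCM audio; buffer_size is the buffer length in bytes.
EXPORTED char *speech_to_text(void *model_state, char *buffer, uint64_t buffer_size);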

Every function is prefixed with EXPORTED so that it is exported and not discarded as unreferenced.

create_model returns void *. Every later call needs DeepSpeech's ModelState, but passing the struct itself back and forth between C and Dart is not feasible. Hence, only a pointer to ModelState is kept on the Dart side, and whenever it is needed, the C side casts it back to ModelState *.
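The opaque-handle idea can be sketched without DeepSpeech. In this minimal example, CounterState, counter_create, counter_next and counter_free are hypothetical names standing in for ModelState and the wrapper functions: the state lives on the C++ side, Dart only holds the void * (a Pointer<Void> in Dart), and every call casts it back to the real type.

```cpp
#include <cstdint>

#define EXPORTED __attribute__((visibility("default"))) __attribute__((used))

// Hypothetical state struct standing in for ModelState; Dart never sees its fields.
struct CounterState {
    int64_t value;
};

extern "C" {

// Allocate the state on the C++ side and hand Dart an opaque pointer.
EXPORTED void *counter_create(int64_t start)
{
    return new CounterState{start};
}

// Cast the opaque pointer back to the real type before using it.
EXPORTED int64_t counter_next(void *handle)
{
    CounterState *state = static_cast<CounterState *>(handle);
    state->value += 1;
    return state->value;
}

// Release the state; the Dart side must call this exactly once when done.
EXPORTED void counter_free(void *handle)
{
    delete static_cast<CounterState *>(handle);
}

}
```

The wrapper in this article has no counterpart to counter_free. For the real ModelState, DeepSpeech provides DS_FreeModel(), which a wrapper could expose in the same way once the model is no longer needed.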

Now, let's write the implementations for these functions. Open libc_deepspeech.cpp and add the following code.

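#include <cstring>
#include <string>

#include "deepspeech.h"
#include "libc_deepspeech.h"

void *create_model(char *model_path)
{
    ModelState *ctx = nullptr;
    int status = DS_CreateModel(model_path, &ctx);
    // DS_CreateModel returns 0 (DS_ERR_OK) on success; return a null pointer otherwise
    if (status != 0) {
        return nullptr;
    }
    return (void *)ctx;
}

char *speech_to_text(void *model_state, char *buffer, uint64_t buffer_size)
{
    // buffer holds 16-bit PCM samples, so the sample count is buffer_size / 2.
    // Up to 3 candidate transcripts are requested; only the best one (index 0) is used.
    Metadata *result = DS_SpeechToTextWithMetadata((ModelState *)model_state, (short *)buffer, buffer_size / 2, 3);
    if (result == nullptr) {
        return nullptr;
    }
    std::string retval = "";
    if (result->num_transcripts > 0) {
        const CandidateTranscript *transcript = &result->transcripts[0];
        // Concatenate the text of every token into the final transcript
        for (unsigned int i = 0; i < transcript->num_tokens; i++) {
            const TokenMetadata &token = transcript->tokens[i];
            retval += token.text;
        }
    }
    // Copy into malloc'd memory that outlives this call; the caller must free it
    char *encoded = strdup(retval.c_str());
    DS_FreeMetadata(result);
    return encoded;
}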

Similarly, you can complete the implementations of deepspeech_verison and model_sample_rate, which wrap DeepSpeech's DS_Version() and DS_GetModelSampleRate() respectively.
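speech_to_text receives raw bytes from Dart and passes buffer_size / 2 to DeepSpeech because each audio sample is a 16-bit integer, i.e. two bytes. The (short *)buffer cast relies on the buffer being suitably aligned and on the host being little-endian, which the ARM targets used here are. As a hedged alternative, the hypothetical helper below decodes little-endian 16-bit PCM (the sample format of a typical 16-bit WAV file) byte by byte, which makes both the halving and the byte order explicit.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: decode little-endian 16-bit PCM bytes into samples.
// One sample occupies 2 bytes, so the sample count is byte_count / 2;
// a trailing odd byte, if any, is ignored.
std::vector<int16_t> pcm16le_to_samples(const unsigned char *bytes, uint64_t byte_count)
{
    std::vector<int16_t> samples(byte_count / 2);
    for (std::size_t i = 0; i < samples.size(); ++i) {
        uint16_t lo = bytes[2 * i];      // least significant byte comes first
        uint16_t hi = bytes[2 * i + 1];
        // Reassemble the unsigned 16-bit pattern, then reinterpret it as signed
        samples[i] = static_cast<int16_t>(static_cast<uint16_t>(lo | (hi << 8)));
    }
    return samples;
}
```

Assuming int16_t is short on your toolchain (as on common Android and iOS compilers), samples.data() and samples.size() could then be passed to DS_SpeechToTextWithMetadata in place of the cast buffer and buffer_size / 2.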

3. Compiling library

We need a few tools for compilation: CMake, Ninja, the Android NDK and Xcode. Make sure the ANDROID_NDK environment variable points to your Android NDK installation, since the Android build commands below use it. You can refer to each tool's documentation for how to install it on your OS.

Also, download the iOS toolchain file from here and place it in build/ios.

Compiling for iOS

Paste the following code in ios/CMakeLists.txt

cmake_minimum_required(VERSION 3.4.1)
FILE(GLOB SRC ../../*.cpp)
add_library(deepspeechlibc STATIC ${SRC})
include_directories(../../)
find_library(DEEPSPEECH_LIB NAMES deepspeech_ios HINTS "../../../libdeepspeech_0.9.3")
target_link_libraries(deepspeechlibc ${DEEPSPEECH_LIB})

The add_library call builds a STATIC iOS library called deepspeechlibc from the sources collected by FILE(GLOB ...). Then, find_library locates deepspeech_ios.framework, and target_link_libraries links it to our library.

Then, from the build folder, run the following commands in a terminal.

$ cmake -Sios -Bbin/ios -G Xcode -DCMAKE_TOOLCHAIN_FILE=ios.toolchain.cmake -DPLATFORM=OS64

$ cmake --build bin/ios --config Release

Compiling for Android

Similarly, for Android, paste the following into android/CMakeLists.txt:

cmake_minimum_required(VERSION 3.4.1)
FILE(GLOB SRC ../../*.cpp)
add_library(deepspeechlibc SHARED ${SRC})
include_directories(../../)
add_library(libdeepspeech SHARED IMPORTED)
get_filename_component(ABI_LIB_PATH ../../../libdeepspeech_0.9.3/android/${ANDROID_ABI}/libdeepspeech.so ABSOLUTE)
set_target_properties( libdeepspeech PROPERTIES IMPORTED_LOCATION ${ABI_LIB_PATH} )
include_directories( ../../../libdeepspeech_0.9.3/ )
target_link_libraries( deepspeechlibc libdeepspeech )

Here, we do the same as for iOS, except that deepspeechlibc is built as a SHARED library and linked with Mozilla's prebuilt libdeepspeech.so for the selected ABI.

From the build folder, run the following commands in a terminal to build for the arm64-v8a architecture.

$ cmake -DCMAKE_TOOLCHAIN_FILE=$ANDROID_NDK/build/cmake/android.toolchain.cmake -G Ninja -DANDROID_NDK=$ANDROID_NDK -DANDROID_ABI=arm64-v8a -DANDROID_PLATFORM=android-29 -Sandroid -Bbin/android/arm64-v8a

$ cmake --build bin/android/arm64-v8a

NOTE: You can build for armeabi-v7a too. Just rerun both commands with ANDROID_ABI=armeabi-v7a and the build directory bin/android/armeabi-v7a.

After this, the bin folder contains the compiled libraries: libdeepspeechlibc.a under bin/ios and libdeepspeechlibc.so under bin/android/<ABI>.

All Done! Let's integrate our library with flutter.

4. Linking with Flutter

We will use the dart:ffi library to call native C APIs from Flutter. We will also add the ffi package to pubspec.yaml, as it contains various utility functions for working with foreign function interfaces.

Let's create a Flutter plugin called deepspeech_flutter. Check out the official Flutter documentation on how to create a Flutter plugin that supports the Android and iOS platforms.

iOS

- Create two folders, libs/arm64 and Frameworks, under the ios folder.
- Copy deepspeech_ios.framework from libdeepspeech_0.9.3 into Frameworks, and copy the libdeepspeechlibc.a that we compiled above into libs/arm64.
- Copy libc_deepspeech.h from libc_deepspeech into the Classes folder.

Open deepspeech_flutter.podspec file and add the following lines.

s.public_header_files = 'Classes/**/*.h'
s.ios.vendored_library = 'libs/arm64/libdeepspeechlibc.a'
s.ios.vendored_frameworks = 'Frameworks/deepspeech_ios.framework'
# Update s.pod_target_xcconfig
s.pod_target_xcconfig = { 'DEFINES_MODULE' => 'YES', 'EXCLUDED_ARCHS[sdk=iphonesimulator*]' => 'i386', 'OTHER_LDFLAGS' => '-lc++ -framework deepspeech_ios' }

Here's the tricky part. For our library to be statically linked into the app executable, we need to call at least one function from our C library in the Swift code. Otherwise, Xcode strips the library's symbols when building the app, and the Dart side cannot find them.

Open SwiftDeepspeechFlutterPlugin.swift and call any function from the library, for example let res = deepspeech_verison(). That's it.

Android

Android is a bit easier.

- Create a libs folder under android, with two subfolders, arm64-v8a and armeabi-v7a. Into each of these folders, copy the .so file that we built for that ABI, along with the DeepSpeech 0.9.3 .so file that we downloaded from its release page in step 1.
- Open build.gradle and paste this line inside the android block:

sourceSets.main.jniLibs.srcDir "${project.projectDir.path}/libs"

Dart

Now that the native libraries are linked into the Flutter plugin, we can call functions from our C library in Dart.

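First, load the library into memory. On Android, libdeepspeechlibc.so is opened by name; on iOS, our library is statically linked into the app, so DynamicLibrary.process() looks up its symbols in the running process.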

import 'dart:ffi';
import 'dart:io' show Platform;

late final DynamicLibrary _deepspeech;
_deepspeech = Platform.isAndroid ? DynamicLibrary.open("libdeepspeechlibc.so") : DynamicLibrary.process();

Then, look up the function named speech_to_text and store a reference to it in the _dsSpeechToText variable.

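import 'package:ffi/ffi.dart'; // provides Utf8

// C signature: char *speech_to_text(void *model_state, char *buffer, uint64_t buffer_size)
typedef NativeSpeechToText = Pointer<Utf8> Function(Pointer, Pointer<Uint8>, Uint64);
typedef SpeechToText = Pointer<Utf8> Function(Pointer, Pointer<Uint8>, int);

late final SpeechToText _dsSpeechToText;
_dsSpeechToText = _deepspeech.lookupFunction<NativeSpeechToText, SpeechToText>('speech_to_text');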

Note: lookupFunction takes two signatures. The first, NativeSpeechToText, describes the C types of the native function; the second, SpeechToText, gives the Dart types they correspond to (for example, the native Uint64 becomes a Dart int).

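Similarly, you can look up the other functions, after defining the DSVersion, CreateModel, NativeModelSampleRate and ModelSampleRate typedefs to match their C declarations.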

_dsVersion = _deepspeech.lookupFunction<DSVersion, DSVersion>('deepspeech_verison');
_dsCreateModel = _deepspeech.lookupFunction<CreateModel, CreateModel>('create_model');
_dsModelSampleRate = _deepspeech.lookupFunction<NativeModelSampleRate, ModelSampleRate>('model_sample_rate');
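Dart only receives a symbol address, so nothing verifies that NativeSpeechToText and the other native typedefs match the real C declarations; a mismatch shows up as wrong values or a crash at runtime. One possible safeguard, sketched here as an assumption rather than part of the original setup, is to mirror each native typedef as a C function-pointer type and let the C++ compiler compare it with the declarations from libc_deepspeech.h. The Native...Fn aliases are hypothetical names, and this only checks the C side: the Dart typedefs still have to be kept in sync by hand.

```cpp
#include <cstdint>
#include <type_traits>

extern "C" {
// Declarations as in libc_deepspeech.h (EXPORTED omitted). No definitions are
// needed: the functions are only inspected inside decltype, never called.
char *deepspeech_verison(void);
void *create_model(char *model_path);
uint64_t model_sample_rate(void *model_state);
char *speech_to_text(void *model_state, char *buffer, uint64_t buffer_size);

// Hypothetical function-pointer types, one per Dart "native" typedef.
// Declared inside extern "C" so their function types also have C linkage.
using NativeDSVersionFn = char *(*)(void);
using NativeCreateModelFn = void *(*)(char *);
using NativeModelSampleRateFn = uint64_t (*)(void *);
using NativeSpeechToTextFn = char *(*)(void *, char *, uint64_t);
}

// Compilation fails if the header and the expected FFI signatures drift apart.
static_assert(std::is_same<decltype(&deepspeech_verison), NativeDSVersionFn>::value, "deepspeech_verison");
static_assert(std::is_same<decltype(&create_model), NativeCreateModelFn>::value, "create_model");
static_assert(std::is_same<decltype(&model_sample_rate), NativeModelSampleRateFn>::value, "model_sample_rate");
static_assert(std::is_same<decltype(&speech_to_text), NativeSpeechToTextFn>::value, "speech_to_text");
```

Such a file only has to compile; because decltype never evaluates its operand, the missing definitions do not cause link errors.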

We now have references to the native C functions. It's time to call them from Dart. Create a function called getVersion() in the plugin class that returns 0.9.3 (i.e., the version of the DeepSpeech library).

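String getVersion() {
  Pointer<Utf8> version = _dsVersion();
  // Copy the native UTF-8 string into a Dart String.
  String value = version.toDartString();
  return value;
}

In the above code snippet, _dsVersion() returns a pointer to a C string. We convert it to a Dart String using toDartString() from package:ffi (versions of package:ffi before 1.0 provided Utf8.fromUtf8() for this instead) and return the value.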

Calling native C code is quite fast, but AVOID memory leaks. Remember to free memory that was allocated with malloc on the C side, including strings returned by strdup, such as the result of speech_to_text.

TIP: Export a C function that calls free and look it up through FFI, so that memory can be released from the Dart side once you are done with it. This helps prevent memory leaks and unwanted crashes.
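Following that tip, a minimal sketch might look like this. copy_string and libc_free_string are hypothetical names: copy_string stands in for any exported function that returns a strdup'd string (as speech_to_text does), and libc_free_string releases such a string with free, matching the allocator that strdup uses.

```cpp
#include <cstdlib>
#include <cstring>

#define EXPORTED __attribute__((visibility("default"))) __attribute__((used))

extern "C" {

// Hypothetical example: returns a heap copy, just like speech_to_text does with strdup.
EXPORTED char *copy_string(const char *text)
{
    return strdup(text != nullptr ? text : "");
}

// Hypothetical release function for strings allocated with strdup/malloc.
// free(nullptr) is a no-op, so passing a null pointer is safe.
EXPORTED void libc_free_string(char *str)
{
    std::free(str);
}

}
```

On the Dart side, libc_free_string would be looked up like the other functions, with Void Function(Pointer<Utf8>) as the native signature and void Function(Pointer<Utf8>) as the Dart one, and called once the returned string has been converted to a Dart String. Strings that come directly from DeepSpeech, such as the one returned by DS_Version(), should instead be released with DeepSpeech's own DS_FreeString().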

Now, we can call functions from the plugin in our flutter app.

Conclusion

That's it! We have successfully linked DeepSpeech with Flutter. You can check out my GitHub repo for the example app, which converts speech from WAV files to text.

Thanks a lot for reading. 😃
