A Guide To Duplicity: Part 1

Learn how to back up data with Duplicity and restore it on the same system.

What do you mean by server backup?

A server backup is a copy of most of the content on a server, stored on a completely different server. The destination is usually called the backup server: a standalone server whose only task is to store copies of content from other servers and to supply that data to replace anything lost on them. The backup strategy varies with each organization's needs and use cases.

There are three basic types of backup strategy:

- Full Backup: A full backup makes a complete copy of all files and folders. It is the most time-consuming backup to perform and may strain your network if it runs over it, but it is also the quickest to restore from because all the files you need are contained in the same backup set.

- Incremental Backup: An incremental backup is one in which each successive copy contains only the data that has changed since the preceding backup copy was made. A full recovery with this method requires the last full backup plus all the incremental backups up to the point of restoration.

- Differential Backup: A differential backup saves only the data that has changed since the last full backup. A full recovery requires the last full backup plus the most recent differential backup.
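To make the difference between a full and an incremental backup concrete, here is a small sketch that uses GNU tar's `--listed-incremental` option instead of Duplicity, purely as an illustration. The scratch directory, the file names, and the snapshot file name are all made up for this example.

```shell
# Illustration only: GNU tar, not Duplicity. All names below are hypothetical.
work="$(mktemp -d)"
mkdir "$work/data"
echo "never touched" > "$work/data/stable.txt"
echo "first version" > "$work/data/edited.txt"
sleep 1  # keep file timestamps clearly apart from the backup time

# Full backup: every file is archived, and state.snar records what was seen.
tar --create --file="$work/full.tar" \
    --listed-incremental="$work/state.snar" -C "$work" data

sleep 1
echo "second version" > "$work/data/edited.txt"
echo "brand new" > "$work/data/new.txt"

# Incremental backup: only files changed since the previous run are archived.
tar --create --file="$work/incr.tar" \
    --listed-incremental="$work/state.snar" -C "$work" data

echo "full backup contains:"
tar --list --file="$work/full.tar"
echo "incremental backup contains:"
tar --list --file="$work/incr.tar"
```

Restoring to the latest state would mean extracting `full.tar` first and then `incr.tar`. Running the second step against a fresh copy of the snapshot file taken right after the full backup would instead give a differential backup.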

What do you mean by a server restore?

A server restore takes data from a backup server and puts it back on a production server when some or all of its data has been lost. A good restore strategy minimizes the downtime caused by data loss on any server.

In this article, we will discuss Duplicity, a tool that proves quite helpful for both server backup and restoration.

Why do we require Duplicity?

Here are some reasons why we require Duplicity in development scenarios:

- To reduce the recovery time objective (RTO), the maximum amount of time the business can afford to go without access to the data or the application.

- To reduce the recovery point objective (RPO), the amount of data you can afford to lose, which effectively dictates how frequently you need to back up your data.

- To keep the data on the backup server encrypted, so that it cannot be used without proper decryption.

At scale, server backup and restoration are important at an organizational level, and the entire backup and restoration flow needs a properly developed strategy.

What is Duplicity?

Duplicity is a server backup and restoration tool that implements a traditional backup scheme: the initial archive contains all the information (a full backup), and only the changed information is added from then on. It is a free, open-source, advanced command-line backup utility built on top of librsync and GnuPG. It produces digitally signed, versioned, and encrypted tar volumes for storage on a local or remote computer. Duplicity supports many protocols for connecting to a file server, including ssh/scp, rsync, ftp, Dropbox, Amazon S3, Google Docs, and Google Drive.

Here's the link to the official docs.

Other alternatives which can be used in place of Duplicity are:

- Simple SCP

- Duplicati

- rsync

- Déjà Dup

- Back In Time

About this article

In this article, we will back up a production server onto a backup server and then restore the data onto the same production server. I have used two CentOS servers: one is the production server and the other acts as the backup server.

So let us get started!

Install duplicity

- To install Duplicity, run the following commands on your prod server:

yum install epel-release

yum install duplicity

duplicity --version

- This only needs to be installed on the prod server and not on the backup server. Here are some other installation guides

Establish a connection between servers

- Create an SSH key on the prod server and add the prod server's `.ssh/id_rsa.pub` key to `.ssh/authorized_keys` on the backup server to establish a secure `ssh` connection:

ssh-keygen -t rsa -m PEM
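Adding the public key on the backup server can be done by hand. The helper below is a minimal sketch of that step: the function name `install_pubkey` and the paths in the usage comment are hypothetical, and it assumes the public key file has already been copied to the backup server. Where it is available, `ssh-copy-id` run from the prod server achieves the same result in one step.

```shell
# Append a public key to an authorized_keys file once, with the
# restrictive permissions sshd normally expects.
# Usage: install_pubkey <public key file> <target .ssh directory>
install_pubkey() {
  pubkey_file="$1"
  ssh_dir="$2"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$ssh_dir/authorized_keys"
  chmod 600 "$ssh_dir/authorized_keys"
  # -x: whole-line match, -F: fixed string, so the key is never added twice.
  if ! grep -qxF -f "$pubkey_file" "$ssh_dir/authorized_keys"; then
    cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
  fi
}

# On the backup server, after copying id_rsa.pub over (hypothetical paths):
# install_pubkey /tmp/id_rsa.pub /root/.ssh
```

Once the key is in place, `ssh root@<backup server>` from the prod server should log in without asking for a password.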

Establish the directory structure

For this article, I am following a directory structure that can help you to understand the concepts of backup and restoration.

- There are two non-root users, `user1` and `user2`, and the directory structure inside */home* looks like this:

```
.
|-- user1
|   |-- dir1
|   |   `-- sub_dir1
|   |       `-- file1
|   `-- dir2
|       `-- file2
`-- user2
    |-- dir3
    |   `-- sub_dir3
    |       `-- file3
    `-- dir4
        `-- file4
```
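The layout above can be recreated with a few commands. This is a sketch that assumes each file sits in the deepest directory of its branch; `BASE` is a hypothetical variable, so the structure can be built in a scratch directory first. It does not create the users themselves or set file ownership.

```shell
# BASE is a hypothetical variable: point it at /home on a test server,
# or leave it unset to build the layout in a scratch directory.
BASE="${BASE:-$(mktemp -d)}"

mkdir -p "$BASE/user1/dir1/sub_dir1" "$BASE/user1/dir2" \
         "$BASE/user2/dir3/sub_dir3" "$BASE/user2/dir4"

# Give every file some content so that restores can be checked later.
echo "file1" > "$BASE/user1/dir1/sub_dir1/file1"
echo "file2" > "$BASE/user1/dir2/file2"
echo "file3" > "$BASE/user2/dir3/sub_dir3/file3"
echo "file4" > "$BASE/user2/dir4/file4"

# Show what was created.
find "$BASE" -type f | sort
```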

## Setup for encryption

Duplicity can use GnuPG (GNU Privacy Guard) to encrypt data. GnuPG allows you to encrypt and sign your data and communications; it features a versatile key management system along with access modules for all kinds of public key directories. GnuPG, also known as GPG, is a command-line tool with features for easy integration with other applications.

- Enter the following command into your console to create GPG keys:

gpg2 --full-gen-key

- You can go ahead with the default values of the prompts if required. You will also be setting a `PASSPHRASE` here. Enter the following command into your console to list the keys:

gpg --list-keys

- We will be using these keys for encryption and decryption.

### Create the backup

- We can create the backup for the entire `/home` directory using the following command:

PASSPHRASE='<passphrase>' duplicity --encrypt-key <key id> /home sftp://root@<backup server>://root/test/home

- Here, we define the source directory to be backed up (`/home`), the encryption key, and the destination server and folder (`<backup server>://root/test/home`). The first run creates a full backup. Any subsequent run of this command creates a faster incremental backup.
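Since repeated runs are incremental by default, it can help to request the backup type explicitly, for example from a scheduled job. The wrapper below is a sketch, not a definitive setup: `KEY_ID`, `BACKUP_HOST`, the `run` and `run_backup` helpers, and the `DRY_RUN` switch are all hypothetical names, and the target uses the `host//absolute/path` URL form. It relies on Duplicity's `full` and `incremental` actions and only prints the commands unless `DRY_RUN=0` is set.

```shell
# Hypothetical values: replace with your own key ID and backup server.
KEY_ID="ABCD1234"
BACKUP_HOST="backup.example.com"
SOURCE_DIR="/home"
TARGET_URL="sftp://root@${BACKUP_HOST}//root/test/home"

# Print the command while DRY_RUN is 1 (the default here);
# execute it for real when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# mode is "full" or "incremental". PASSPHRASE is expected to be set in the
# environment, as in the commands in this article.
run_backup() {
  mode="$1"
  run duplicity "$mode" --encrypt-key "$KEY_ID" "$SOURCE_DIR" "$TARGET_URL"
}

run_backup full          # for example, once a week
run_backup incremental   # for example, every night in between
```

A shorter chain of incrementals between full backups means fewer archives to replay during a restore, which works in favour of a lower RTO.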

- To list the backed-up content from the prod server, run the following command:

PASSPHRASE='<passphrase>' duplicity list-current-files --encrypt-key <key id> sftp://root@<backup server>://root/test/home

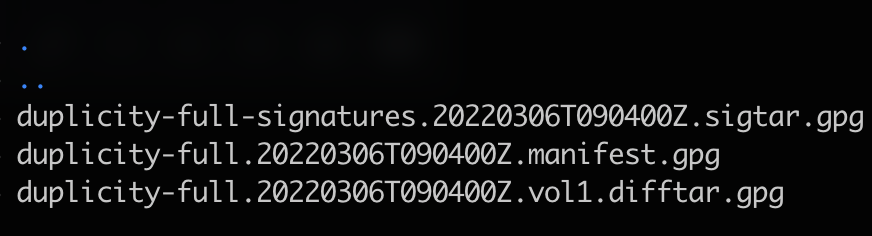

- If we list the contents of the destination folder on the backup server (`/root/test/home`) with `ls -la`, we will find that the backed-up data is stored in encrypted form.

### Testing restoration scenarios

Here we will work through a few scenarios by deleting data manually and then trying to restore it.

**Case 1 - Restoring `/home/user2` (Directory)**

- We will forcefully delete `/home/user2` here. Now, even if we log in as `user2` (`su -l user2`), we won't find any content in it.

- To restore the deleted data, we can run the following snippet:

PASSPHRASE='<passphrase>' duplicity --encrypt-key <key id> --file-to-restore user2 sftp://root@<backup server>://root/test/home /home/user2

- We can use `--file-to-restore` to carry out restoration and define the final location at the end as the restoration destination.

> Note a few points here:

> The value of `--file-to-restore` should be a path taken from the output of `list-current-files`.

> The restoration destination is resolved relative to the current directory unless it is absolute, so it is a good idea to give the absolute path to avoid restoring to the wrong location.

> Even if the user itself gets deleted, you can create the user again and restore the directory; it will correctly point to `/home/<user>` with the correct file permissions.

**Case 2 - Restoring `user1/dir1/sub_dir1` (sub-directory)**

- Here we will try to restore a sub-directory present inside a specific path. To restore, run the following snippet:

PASSPHRASE='<passphrase>' duplicity --encrypt-key <key id> --file-to-restore user1/dir1/sub_dir1 sftp://root@<backup server>://root/test/home /home/user1/dir1/sub_dir1

**Case 3 - Restoring `user1/dir2/file2` (File)**

Here, we will try to restore a file present inside a specific path. To restore, run the following snippet:

PASSPHRASE='<passphrase>' duplicity --encrypt-key <key id> --file-to-restore user1/dir2/file2 sftp://root@<backup server>://root/test/home /home/user1/dir2/file2
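The three cases so far follow one pattern: a path relative to the backup root goes to `--file-to-restore`, and the same path under `/home` is the absolute destination. A small helper can capture that pattern. This is only a sketch: `KEY_ID`, the example host, `RESTORE_ROOT`, and the `run` and `restore_path` helpers are hypothetical names, and commands are printed rather than executed unless `DRY_RUN=0` is set.

```shell
# Hypothetical values: replace with your own key ID and backup server.
KEY_ID="ABCD1234"
TARGET_URL="sftp://root@backup.example.com//root/test/home"
RESTORE_ROOT="/home"   # where the backed-up tree originally lived

# Print the command while DRY_RUN is 1 (the default here);
# execute it for real when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# rel_path is a path as printed by list-current-files, e.g. user1/dir2/file2.
restore_path() {
  rel_path="$1"
  case "$rel_path" in
    /*)
      echo "expected a path relative to the backup root: $rel_path" >&2
      return 1
      ;;
  esac
  # Always pass an absolute destination so the restore cannot land
  # somewhere under the current working directory by accident.
  run duplicity --encrypt-key "$KEY_ID" --file-to-restore "$rel_path" \
      "$TARGET_URL" "$RESTORE_ROOT/$rel_path"
}

restore_path user2                 # Case 1: a whole home directory
restore_path user1/dir1/sub_dir1   # Case 2: a sub-directory
restore_path user1/dir2/file2      # Case 3: a single file
```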

**Case 4 - Restoring the entire backed up folder**

There is no need for `--file-to-restore` when restoring the entire backed-up folder. Because the first parameter is a remote URL and the last parameter is a local path, Duplicity knows that it should restore from the backup to that path. Run the following command:

PASSPHRASE='<passphrase>' duplicity --encrypt-key <key id> sftp://root@<backup server>://root/test/home <destination directory>

In this article, we have worked through and tested most of the scenarios one might encounter when backing up and restoring organisational data.

### Some additional features of Duplicity:

**Deleting older backups:**

duplicity remove-older-than 5M sftp://<...>....

This deletes backups that are older than five months.

**Restoring files as they were at an earlier point in time**

duplicity -t 3D10h --file-to-restore ...

Here, we restore files as they were three days and ten hours ago.
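The two features above can be combined into a small maintenance routine. The sketch below uses a hypothetical target URL and a hypothetical `run` helper that only prints commands unless `DRY_RUN=0` is set. It relies on three documented behaviours: `collection-status` lists the stored backup chains, `remove-older-than` only lists the old sets unless `--force` is given, and interval strings use `s`, `m`, `h`, `D`, `W`, `M`, and `Y` as units.

```shell
# Hypothetical backup server; replace with your own.
TARGET_URL="sftp://root@backup.example.com//root/test/home"

# Print the command while DRY_RUN is 1 (the default here);
# execute it for real when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Show the chains of full and incremental backup sets at the target.
run duplicity collection-status "$TARGET_URL"

# Uppercase M means months (lowercase m would mean minutes).
# Without --force, the old backup sets are only listed, not deleted.
run duplicity remove-older-than 5M --force "$TARGET_URL"

# Restore one file as it was three days and ten hours ago.
run duplicity -t 3D10h --file-to-restore user1/dir2/file2 \
    "$TARGET_URL" /home/user1/dir2/file2
```

Checking `collection-status` before pruning is a cheap way to confirm that a recent full backup exists.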

Conclusion

Duplicity offers many advantages when it comes to storing organizational data. Some of the major advantages that make it a good choice for backing up and restoring data are:

- It is easy to use.

- It is an agentless service, which means that there is no need to install agents on the backup server.

- It provides encrypted and signed archives and backups, thanks to GnuPG.

- It is bandwidth- and space-efficient, as it uses incremental backups.

- It supports standard file formats.

- We can use our choice of remote protocol: scp/ssh, ftp, rsync, HSI, WebDAV, Tahoe-LAFS, Amazon S3, etc.

I found Duplicity very useful as a solution for effective server backup and restoration. I would encourage you to give it a try and adopt it for the many benefits it offers.

I will also be writing Part 2 of this guide, on backing up MySQL DBs and restoring them on a completely new server. Hope to see you there!

So stay tuned and happy learning !! 😊😊